Revolutionizing Filmmaking: The Power of Virtual Production Camera Tracking Systems

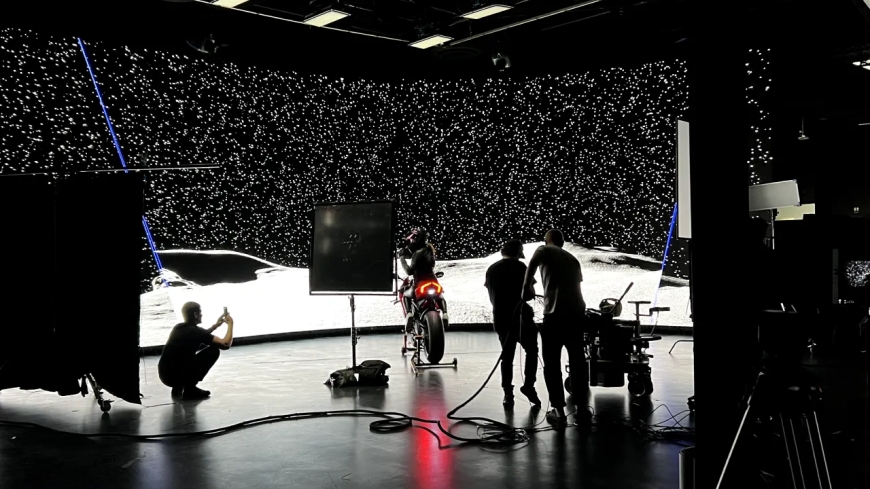

Virtual production has emerged as a transformative force in filmmaking, blending real-world footage with computer-generated environments in real time to create immersive, photorealistic visuals. At the heart of this revolution lies the virtual production camera tracking system, a technology that ensures seamless integration between physical camera movements and virtual scenes. By precisely tracking a camera's position, orientation, and lens data, these systems enable filmmakers to craft dynamic, believable worlds without relying on costly physical sets or extensive post-production. In this guest post, we'll dive into the mechanics, benefits, and future potential of camera tracking systems in virtual production, exploring how they're reshaping the creative and technical landscape of modern filmmaking.

What Is a Camera Tracking System?

A camera tracking system is a sophisticated tool designed to capture the real-time position, orientation, and lens parameters of a physical camera in three-dimensional space. The position and orientation data, often referred to as 6DoF (six degrees of freedom: three for position and three for rotation), is fed together with the lens data into a 3D rendering engine, such as Unreal Engine or Unity, to synchronize the perspective of a virtual scene with the live-action camera's movements. The result is a parallax effect, where the virtual background shifts naturally as the camera moves, creating the illusion that the digital environment exists in the physical world.
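To make the parallax effect concrete, here is a minimal sketch using a simple pinhole camera model. The scene values (a prop 2 m away, a mountain 50 m away, a 35 mm lens, a 10 cm sideways camera move) and all function names are illustrative assumptions, not taken from any particular tracking system or engine.

```python
from dataclasses import dataclass


@dataclass
class Point3D:
    x: float  # metres to the right of the starting camera position
    y: float  # metres up
    z: float  # metres in front of the camera


def project_x_mm(point: Point3D, camera_x: float, focal_length_mm: float) -> float:
    """Horizontal sensor coordinate (mm) of a point seen by a pinhole camera.

    The camera sits at (camera_x, 0, 0) and looks straight down the +z axis.
    """
    if point.z <= 0:
        raise ValueError("point must be in front of the camera")
    return focal_length_mm * (point.x - camera_x) / point.z


def parallax_shift_mm(point: Point3D, camera_dx: float, focal_length_mm: float) -> float:
    """How far the point's image moves when the camera slides sideways by camera_dx metres."""
    before = project_x_mm(point, 0.0, focal_length_mm)
    after = project_x_mm(point, camera_dx, focal_length_mm)
    return after - before


# Hypothetical scene: a nearby prop and a distant virtual mountain.
near_prop = Point3D(x=0.0, y=0.0, z=2.0)
far_mountain = Point3D(x=0.0, y=0.0, z=50.0)

near_shift = parallax_shift_mm(near_prop, camera_dx=0.10, focal_length_mm=35.0)
far_shift = parallax_shift_mm(far_mountain, camera_dx=0.10, focal_length_mm=35.0)
print(f"near: {near_shift:.3f} mm, far: {far_shift:.3f} mm")
```

In this toy setup the nearby prop moves 25 times further across the sensor than the distant mountain. A rendering engine needs the tracked camera pose to reproduce exactly this depth-dependent shift in the virtual background.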

Camera tracking systems are essential for virtual production workflows, including LED volume stages (like those used in The Mandalorian), green screen setups, and augmented reality (AR) applications. They allow filmmakers to visualize composited footage on set, reducing the need for post-production fixes and enabling real-time creative decision-making.

Types of Camera Tracking Systems

Camera tracking systems fall into two primary categories, optical and mechanical, with hybrid systems combining more than one approach. Additionally, newer markerless systems leverage advanced technologies like Visual SLAM (Simultaneous Localization and Mapping) for greater flexibility. Here's a breakdown:

- Optical Tracking Systems: These use cameras, reflective markers, or infrared (IR) sensors to track objects in a scene. Markers are placed on the camera rig or around the set, and high-precision cameras (like those in OptiTrack or ZEISS CinCraft Scenario) detect their movement. Optical systems are highly accurate but may require controlled environments to avoid reflective interference.

- Mechanical Tracking Systems: These rely on physical components, such as encoded motors or cranes, to track camera movements. They're robust for large-scale setups like sports broadcasts but are more expensive and less portable than optical systems.

- Markerless Tracking Systems: Using technologies like Visual SLAM, markerless systems (e.g., Sony OCELLUS or REtracker Bliss) map the environment in real time without requiring physical markers. They offer greater flexibility for outdoor shoots or dynamic sets but may face challenges with drift or recalibration.

- Hybrid Systems: Some solutions, like ZEISS CinCraft Scenario, combine marker-based and markerless tracking to balance accuracy and adaptability across diverse environments.

Each system has trade-offs in accuracy, cost, setup time, and compatibility, making the choice dependent on the production's needs.

How Camera Tracking Systems Work in Virtual Production

The workflow of a virtual production camera tracking system involves several key components:

- Tracking Hardware: This includes sensors, markers, or cameras mounted on the rig or set. For example, OptiTrack's CinePuck uses active LED markers synced with cameras for low-latency tracking, while Sony's OCELLUS employs a multi-eye sensor unit for markerless operation.

- Data Processing: A processing unit aggregates positional data, lens metadata (focus, iris, zoom), and timecode. Systems like EZtrack's Hub or Sony's OCELLUS Processing Box integrate this data via protocols like FreeD or LiveLink for compatibility with rendering engines.

- Rendering Engine Integration: The tracking data is sent to a 3D engine, which renders the virtual scene in real time. Compatibility with engines like Unreal Engine, Zero Density, or Pixotope is critical for seamless operation.

- Output and Recording: Tracking data can be used live for in-camera VFX or recorded (e.g., in FBX format) for post-production. Systems like OCELLUS support both, streamlining workflows from on-set previs to final compositing.

This process ensures that the virtual environment responds realistically to camera movements, enabling directors and cinematographers to frame shots with precision.
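As an illustration of the data processing step, the sketch below decodes a single FreeD-style pose message in Python. The byte layout used here (a 29-byte "D1" message with 24-bit big-endian fields, angles scaled by 32768 per degree, positions by 64 per millimetre, and a checksum of 0x40 minus the byte sum) is the layout commonly described for FreeD, but it is an assumption in this post and should be checked against your tracker vendor's documentation. The `CameraPose` class and the `parse_d1` and `build_d1` helpers are hypothetical names.

```python
from dataclasses import dataclass

D1_LENGTH = 29          # bytes in a D1 pose message (assumed layout, see text above)
ANGLE_SCALE = 32768.0   # assumed angle units per degree
POSITION_SCALE = 64.0   # assumed position units per millimetre


@dataclass
class CameraPose:
    camera_id: int
    pan_deg: float
    tilt_deg: float
    roll_deg: float
    x_mm: float
    y_mm: float
    z_mm: float
    zoom_raw: int   # raw lens encoder counts, not focal length
    focus_raw: int  # raw lens encoder counts, not distance


def checksum(body: bytes) -> int:
    """Checksum byte: 0x40 minus the sum of all preceding bytes, modulo 256."""
    return (0x40 - sum(body)) % 256


def _signed24(chunk: bytes) -> int:
    return int.from_bytes(chunk, "big", signed=True)


def parse_d1(packet: bytes) -> CameraPose:
    """Decode one D1 message into degrees, millimetres, and raw lens counts."""
    if len(packet) != D1_LENGTH or packet[0] != 0xD1:
        raise ValueError("not a D1 message")
    if checksum(packet[:-1]) != packet[-1]:
        raise ValueError("checksum mismatch")
    return CameraPose(
        camera_id=packet[1],
        pan_deg=_signed24(packet[2:5]) / ANGLE_SCALE,
        tilt_deg=_signed24(packet[5:8]) / ANGLE_SCALE,
        roll_deg=_signed24(packet[8:11]) / ANGLE_SCALE,
        x_mm=_signed24(packet[11:14]) / POSITION_SCALE,
        y_mm=_signed24(packet[14:17]) / POSITION_SCALE,
        z_mm=_signed24(packet[17:20]) / POSITION_SCALE,
        zoom_raw=int.from_bytes(packet[20:23], "big"),
        focus_raw=int.from_bytes(packet[23:26], "big"),
    )


def build_d1(pose: CameraPose) -> bytes:
    """Encode a pose as a D1 message; handy for tests without tracking hardware."""
    def s24(value: float, scale: float) -> bytes:
        return round(value * scale).to_bytes(3, "big", signed=True)

    body = bytes([0xD1, pose.camera_id])
    body += s24(pose.pan_deg, ANGLE_SCALE) + s24(pose.tilt_deg, ANGLE_SCALE)
    body += s24(pose.roll_deg, ANGLE_SCALE)
    body += s24(pose.x_mm, POSITION_SCALE) + s24(pose.y_mm, POSITION_SCALE)
    body += s24(pose.z_mm, POSITION_SCALE)
    body += pose.zoom_raw.to_bytes(3, "big") + pose.focus_raw.to_bytes(3, "big")
    body += bytes(2)  # two spare bytes
    return body + bytes([checksum(body)])


# Round-trip a made-up pose to check that the decoder and encoder agree.
sample = CameraPose(1, 90.0, -10.5, 0.0, 1000.0, -250.25, 1800.0, 4096, 2048)
decoded = parse_d1(build_d1(sample))
print(decoded)
```

In a real pipeline these messages would typically arrive over UDP, once per frame, and the raw zoom and focus counts would be mapped to focal length and focus distance through a lens calibration file.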

Benefits of Camera Tracking Systems in Virtual Production

The adoption of camera tracking systems has revolutionized virtual production, offering numerous advantages:

1. Real-Time Visualization

Camera tracking allows filmmakers to see the final composite of live-action and CG elements on set. This eliminates guesswork, enabling directors to make immediate creative decisions. For instance, Mo-Sys StarTracker has powered real-time AR graphics in over 100 TV stations worldwide, enhancing live broadcasts.

2. Cost and Time Efficiency

By reducing reliance on physical sets and minimizing post-production fixes, virtual production saves significant time and money. Portable systems like VIVE Mars CamTrack make virtual production accessible even to indie creators, with setups taking minutes instead of hours.

3. Creative Freedom

Camera tracking systems support dynamic camera movements (handheld, Steadicam, crane, or drone) without sacrificing precision. Markerless systems like REtracker Bliss allow filmmakers to shoot in diverse environments, from indoor LED volumes to outdoor locations, unlocking new creative possibilities.

4. Enhanced Accuracy

High-end systems can deliver sub-millimeter precision, which is critical for aligning virtual and physical elements. OptiTrack's continuous calibration ensures consistent data quality, while ZEISS CinCraft Scenario leverages lens metadata for plug-and-play operation.
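To see why sub-millimeter precision matters, the following back-of-the-envelope sketch converts a sideways tracking error into an on-screen misalignment using the pinhole relation (shift on sensor = focal length × error / distance). The lens, sensor width, and resolution are assumed round numbers for illustration only, and `pixel_error` is a hypothetical helper.

```python
def pixel_error(position_error_mm: float, subject_distance_m: float,
                focal_length_mm: float, sensor_width_mm: float,
                horizontal_pixels: int) -> float:
    """Approximate horizontal misalignment, in pixels, caused by a sideways tracking error.

    Pinhole model: an offset e at distance Z lands f * e / Z away on the sensor.
    """
    distance_mm = subject_distance_m * 1000.0
    shift_on_sensor_mm = focal_length_mm * position_error_mm / distance_mm
    pixel_pitch_mm = sensor_width_mm / horizontal_pixels
    return shift_on_sensor_mm / pixel_pitch_mm


# Assumed setup: 35 mm lens, 24 mm wide sensor, 4000 px across, subject 2 m away.
coarse = pixel_error(1.0, 2.0, 35.0, 24.0, 4000)   # 1 mm tracking error
fine = pixel_error(0.2, 2.0, 35.0, 24.0, 4000)     # 0.2 mm tracking error
print(f"1 mm error: {coarse:.2f} px, 0.2 mm error: {fine:.2f} px")
```

Under these assumptions a 1 mm error shifts a virtual element by roughly three pixels next to a physical object 2 m from the camera, while a 0.2 mm error stays under one pixel. Longer lenses and closer subjects make the same error more visible.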

5. Versatility Across Applications

Camera tracking systems are used beyond film, in live broadcasts, AR overlays, and virtual events. For example, stYpe's Follower Remote enhances FOX News studio visuals, while Antilatency's trackers support multipurpose tracking for cameras, talent, and props.

Challenges and Considerations

Despite their benefits, camera tracking systems face challenges that filmmakers must navigate:

- Environmental Constraints: Optical systems may struggle with reflective surfaces or low-light conditions, while markerless systems can drift without frequent recalibration.

- Cost: High-end systems like OptiTrack can be expensive, though budget-friendly options like VIVE Mars CamTrack are emerging.

- Setup Complexity: Marker-based systems require calibration and marker placement, which can be time-consuming. Markerless systems reduce setup time but may demand more computational power.

- Integration: Ensuring compatibility with cameras, lenses, and rendering engines is critical. Systems like EZtrack's Hub address this by supporting multiple protocols and configurations.

Choosing the right system involves balancing these factors against the production's scale, budget, and creative goals.
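The drift issue noted under environmental constraints can be illustrated with a deliberately simple toy model. It assumes a tracker that accumulates a small, constant position error every frame and is optionally reset by an absolute reference (for example, a marker sighting) at a fixed interval. Real SLAM drift is noisier and depends on the scene, so the numbers and the `simulate_drift` function are hypothetical.

```python
from typing import List, Optional


def simulate_drift(frames: int, per_frame_error_mm: float,
                   correction_interval: Optional[int] = None) -> List[float]:
    """Return the accumulated position error (mm) after each frame.

    If correction_interval is given, the error is reset to zero every
    correction_interval frames, standing in for a recalibration event.
    """
    error = 0.0
    history = []
    for frame in range(1, frames + 1):
        error += per_frame_error_mm
        if correction_interval and frame % correction_interval == 0:
            error = 0.0
        history.append(error)
    return history


# Ten seconds at 24 fps with an assumed 0.01 mm of error added per frame.
uncorrected = simulate_drift(240, 0.01)
corrected = simulate_drift(240, 0.01, correction_interval=24)
print(f"worst case without correction: {max(uncorrected):.2f} mm")
print(f"worst case with correction each second: {max(corrected):.2f} mm")
```

Even in this simplified picture, small per-frame errors add up to millimetres within seconds unless something re-anchors the estimate, which is why recalibration strategy is worth asking vendors about.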

Innovations Shaping the Future

The camera tracking system landscape is evolving rapidly, driven by advancements in hardware, software, and AI. Recent innovations include:

- Markerless Tracking: Sony's OCELLUS and REtracker Bliss use Visual SLAM for flexible, marker-free setups, ideal for outdoor or ad-hoc shoots.

- Hybrid Solutions: ZEISS CinCraft Scenario combines marker-based and markerless tracking, offering versatility for diverse environments.

- Portability: Compact systems like Antilatency's trackers and EZtrack's Hub enable quick deployment across multiple studios.

- AI-Driven Calibration: Automated calibration tools, like OptiTrack's Continuous Calibration or EZlineup software, reduce operator workload and improve data quality.

- Integration with VR/AR: Systems like VIVE Mars CamTrack and Antilatency support VR scouting and multipurpose tracking, blending virtual production with immersive experiences.

These advancements are making camera tracking more accessible, accurate, and adaptable, democratizing virtual production for creators at all levels.

Case Studies: Camera Tracking in Action

- The Mandalorian: OptiTrack's PrimeX 41 cameras tracked the principal camera's movements on the LED volume stage, delivering sub-millimeter precision for seamless in-camera VFX.

- Rain On Me Music Video: Vanishing Point's Vector real-time tracking enabled live compositing of Lady Gaga and Ariana Grande's performance with virtual backgrounds.

- Comandante: EZtrack's Hub, paired with OptiTrack, facilitated near-real-time post-production by aggregating tracking data from multiple sources during shooting.

These examples highlight how camera tracking systems empower filmmakers to push creative boundaries while streamlining production.

Choosing the Right Camera Tracking System

Selecting a virtual production camera tracking system depends on several factors:

- Accuracy Needs: High-precision systems like OptiTrack or ZEISS are ideal for large-scale productions, while VIVE Mars CamTrack suits smaller projects.

- Environment: Markerless systems like Sony OCELLUS excel in dynamic or outdoor settings, while optical systems thrive in controlled studios.

- Budget: Affordable options like VIVE Mars or Antilatency cater to indie filmmakers, while premium systems like Mo-Sys StarTracker target professional studios.

- Portability: Lightweight, modular systems like EZtrack's Hub are perfect for multi-studio deployments.

- Integration: Ensure compatibility with your rendering engine, camera rig, and lens ecosystem. Systems like ZEISS CinCraft Scenario simplify this with plug-and-play lens data.

Consulting with experts and testing systems in your workflow is crucial for making an informed choice.

Conclusion

Virtual production camera tracking systems are redefining filmmaking by bridging the gap between real and virtual worlds. From real-time visualization to cost savings and creative freedom, these systems empower filmmakers to tell stories with unprecedented precision and efficiency. As innovations like markerless tracking, AI calibration, and hybrid solutions continue to evolve, camera tracking is becoming more versatile and accessible, opening new doors for creators across industries. Whether you're an indie filmmaker or a Hollywood studio, investing in a robust camera tracking system is a step toward the future of storytelling.